Invisible UX Is the Future, but Human-in-Command Is the Bridge

When I used Windsurf to debug code line by line, I thought I needed help with code. I got a teammate instead.

I’m not a software developer. I can read code and follow logic, but when it comes to fixing syntax errors or tracking down weird bugs, it’s a pain.

Recently, I tried Windsurf, an AI tool that helps you walk through code execution line by line inside your editor.

And something clicked.

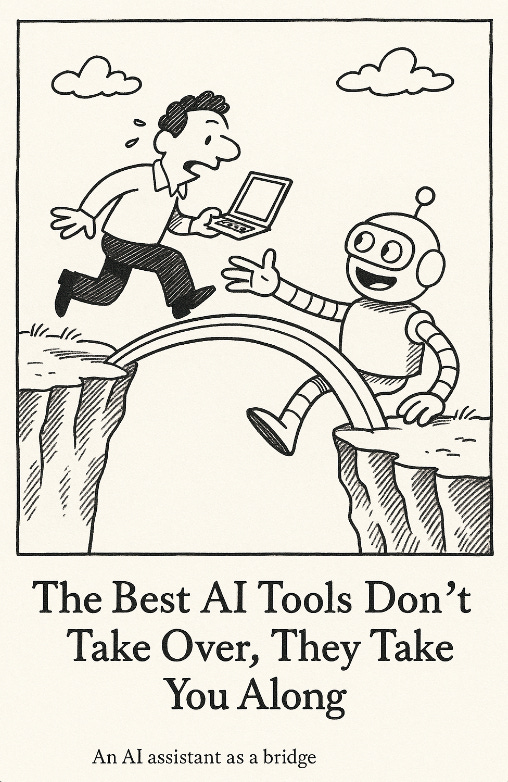

🤝 The Best AI Tools Don’t Take Over, They Take You Along

Windsurf didn’t just drop in code and say, “Here, trust me.”

It guided me step by step.

It showed me what each line was doing

It asked for input when things were unclear

It let me confirm or correct before continuing

It explained failures in a way that made sense

I finished the task, but more importantly, I understood it. I would know what to do next time.

This is the kind of AI experience people actually want right now.

Not magic. Not total automation. Just helpful support that keeps you in control.

👥 Human-in-Command vs. System-in-Command

As AI tools evolve, two distinct design modes are emerging.

System-in-Command means the assistant takes full control. It interprets your intent, runs actions, and gives you a result without asking for input.

Human-in-Command means the assistant surfaces decisions and shows its reasoning. It lets the user stay involved and guide the outcome.

The industry is moving toward System-in-Command. Invisible UX. Frictionless automation. But most users, especially in technical or high-stakes environments, aren’t ready to give up control. They want to stay in the loop, not be left out of the decision.

🤖❤️ Trust Isn’t Automatic

Using Windsurf felt different because it explained itself.

I could see where the data came from

I knew what it was about to do

I understood why it made the decision it did

It didn’t just solve the problem. It earned my confidence along the way. And that made all the difference.

🧠 Designing for Human-in-Command

If we want people to trust and adopt AI tools, we need to meet them where they are. We need to build for Human-in-Command interactions where:

The assistant explains rather than assumes.

It shows its work rather than hiding its logic.

It invites feedback rather than forcing outcomes.

It surfaces only what matters, not every possible detail.

It helps users learn, not just finish.

🛠️ Technical Foundations of Human-in-Command Systems

To support Human-in-Command experiences, AI assistants need more than a friendly interface. They need architectural support to make trust, control, and learning possible.

Planner Transparency

Output step-by-step reasoning that can be shown to the user when appropriate.
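Here’s a minimal sketch of what planner transparency could look like, assuming a hypothetical `Plan` object where every step carries a plain-language rationale. The class and field names are made up for illustration; this isn’t how Windsurf or any particular assistant is built.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    action: str     # what the assistant intends to do
    rationale: str  # why, in plain language, so it can be shown to the user


@dataclass
class Plan:
    goal: str
    steps: list[PlanStep] = field(default_factory=list)

    def add(self, action: str, rationale: str) -> None:
        self.steps.append(PlanStep(action, rationale))

    def render(self, show_rationale: bool = True) -> str:
        """Format the plan as numbered lines the UI can display before acting."""
        lines = [f"Goal: {self.goal}"]
        for number, step in enumerate(self.steps, start=1):
            line = f"{number}. {step.action}"
            if show_rationale:
                line += f" (why: {step.rationale})"
            lines.append(line)
        return "\n".join(lines)


# Hypothetical debugging plan, rendered before any code is touched.
plan = Plan("Fix the failing date parser")
plan.add("Run the failing test", "reproduce the error before changing code")
plan.add("Inspect parse_date()", "the traceback points to this function")
print(plan.render())
```

Passing `show_rationale=False` gives a compact view. The reasoning stays attached to each step either way, so the interface can reveal it whenever the user asks.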

Agent Reasoning and Memory

Store short-term interaction history and make logic traceable through thought summaries.
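One way to picture this is a small, bounded memory where each turn keeps a one-line thought summary. This is a sketch under assumptions: the `ShortTermMemory` and `Turn` names and the `max_turns` limit are hypothetical, chosen only to show how recent history and traceable reasoning can live side by side.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    user: str
    assistant: str
    thought_summary: str  # one-line summary of why the assistant responded this way


class ShortTermMemory:
    """Keeps only the most recent turns so context stays small and inspectable."""

    def __init__(self, max_turns: int = 5) -> None:
        # deque with maxlen silently drops the oldest turn once full.
        self.turns: deque[Turn] = deque(maxlen=max_turns)

    def record(self, user: str, assistant: str, thought_summary: str) -> None:
        self.turns.append(Turn(user, assistant, thought_summary))

    def trace(self) -> list[str]:
        """Return the thought summaries, oldest first, so a user can follow the logic."""
        return [turn.thought_summary for turn in self.turns]


memory = ShortTermMemory(max_turns=2)
memory.record("Why does line 12 fail?", "It divides by zero.", "Traced value of count to 0")
memory.record("How do I fix it?", "Guard the division.", "Suggested a check before dividing")
memory.record("Apply it", "Done.", "Edited line 12 after user approval")
print(memory.trace())
```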

Disambiguation and Clarification

Detect vague inputs and respond with clarifying questions instead of guessing.
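A deliberately simple sketch of the idea: instead of guessing, the function returns a question when the request has no clear target or more than one. The keyword list and the `clarify_or_proceed` helper are hypothetical; a real assistant would more likely let the model itself judge ambiguity.

```python
import re

# Hypothetical heuristic: words that usually need a referent to make sense.
VAGUE_REFERENCES = {"it", "this", "that", "thing", "stuff"}


def clarify_or_proceed(request: str, known_files: set[str]) -> str:
    """Return a clarifying question if the request is ambiguous, else "PROCEED".

    known_files is assumed to hold lowercase file names from the open project.
    """
    words = [w.strip(".") for w in re.findall(r"[a-z0-9_.]+", request.lower())]
    mentioned = {w for w in words if w in known_files}
    if len(mentioned) > 1:
        # Several candidate targets: ask instead of picking one.
        return f"Which file should I change: {', '.join(sorted(mentioned))}?"
    if not mentioned and VAGUE_REFERENCES & set(words):
        # A pronoun with nothing concrete to point at.
        return "Which file or function do you mean?"
    return "PROCEED"


files = {"app.py", "utils.py"}
print(clarify_or_proceed("fix it", files))
print(clarify_or_proceed("Fix the bug in app.py.", files))
print(clarify_or_proceed("refactor app.py or utils.py", files))
```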

Data Provenance

Connect every result to a source, such as an API call, document, or dashboard.
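A minimal sketch, assuming answers are plain objects that must carry at least one source. The `Answer` and `Source` types are hypothetical, and the example answer and file path are invented for illustration; the point is that an unsourced answer can’t even be constructed.

```python
from dataclasses import dataclass
from typing import Literal

# The three source kinds mentioned above; Literal is a type hint, not enforced at runtime.
SourceKind = Literal["api_call", "document", "dashboard"]


@dataclass(frozen=True)
class Source:
    kind: SourceKind
    ref: str  # endpoint, file path, or dashboard name


@dataclass(frozen=True)
class Answer:
    text: str
    sources: tuple[Source, ...]

    def __post_init__(self) -> None:
        # Refuse to build an answer that cannot be traced back to anything.
        if not self.sources:
            raise ValueError("every answer must cite at least one source")

    def cite(self) -> str:
        refs = "; ".join(f"{s.kind}: {s.ref}" for s in self.sources)
        return f"{self.text} [sources: {refs}]"


# Hypothetical answer and source path, for illustration only.
answer = Answer("The retry limit is set to 3.", (Source("document", "config/settings.yaml"),))
print(answer.cite())
```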

User-in-the-Loop Controls

Ask for confirmation on important actions and allow users to explore the “why” if they want.
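Here’s a rough sketch of a confirmation gate, assuming a hypothetical `HIGH_IMPACT` list decides which actions need sign-off. Low-impact actions run directly; important ones show the user the reason first and only proceed on a yes. The action names and the `execute` helper are illustrative, not any real tool’s API.

```python
from typing import Callable

# Hypothetical set of actions important enough to need the user's sign-off.
HIGH_IMPACT = {"delete_file", "run_migration", "push_to_main"}


def execute(action: str, why: str, run: Callable[[], str], confirm: Callable[[str], bool]) -> str:
    """Run low-impact actions directly; show the reason and ask before high-impact ones."""
    if action in HIGH_IMPACT:
        if not confirm(f"About to {action}. Reason: {why}. Proceed?"):
            return f"skipped {action} (user declined)"
    return run()


def ask_in_terminal(prompt: str) -> bool:
    """One possible confirm callback for a command-line tool."""
    return input(f"{prompt} [y/N] ").strip().lower() == "y"


# In a CLI you might pass confirm=ask_in_terminal; here the user declines.
print(execute("format_code", "consistent style", lambda: "formatted", lambda prompt: False))
print(execute("delete_file", "file is unused", lambda: "deleted", lambda prompt: False))
```

Passing `confirm` in as a function keeps the gate independent of the interface: a terminal, a dialog box, or a chat message can all ask the same question.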

Preference and Feedback Integration

Learn from what users approve, correct, or ignore to improve future interactions.
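A toy version of this feedback loop, assuming each suggestion has a "kind" and each reaction adds a fixed, hypothetical weight. The `PreferenceModel` class and the weights are made up for illustration; real systems might decay old feedback or learn per user.

```python
# Hypothetical weights: approvals reinforce a kind of suggestion, corrections and ignores discount it.
FEEDBACK_WEIGHTS = {"approved": 1.0, "corrected": -0.5, "ignored": -0.25}


class PreferenceModel:
    def __init__(self) -> None:
        self.scores: dict[str, float] = {}

    def record(self, suggestion_kind: str, feedback: str) -> None:
        if feedback not in FEEDBACK_WEIGHTS:
            raise ValueError(f"unknown feedback: {feedback!r}")
        current = self.scores.get(suggestion_kind, 0.0)
        self.scores[suggestion_kind] = current + FEEDBACK_WEIGHTS[feedback]

    def rank(self, kinds: list[str]) -> list[str]:
        """Order candidate suggestion kinds by accumulated feedback, best first."""
        return sorted(kinds, key=lambda kind: self.scores.get(kind, 0.0), reverse=True)


prefs = PreferenceModel()
prefs.record("inline_explanation", "approved")
prefs.record("inline_explanation", "approved")
prefs.record("full_rewrite", "corrected")
prefs.record("lint_warning", "ignored")
print(prefs.rank(["full_rewrite", "lint_warning", "inline_explanation"]))
```

The additive score is only a stand-in, but the loop is the same: record what users approve, correct, or ignore, then let it shape what the assistant offers next.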

These aren’t just technical checkboxes. They are the building blocks of trust. Without them, assistants feel opaque. With them, assistants feel like partners.

🌉 We’re Not Building the Finish Line. We’re Building the Bridge.

Invisible UX is where we’re headed. But most people aren’t ready to hand over the keys.

They want help, not handoff. They want to learn, not just finish. They want to understand, not just observe.

When tools guide people well, users walk away more capable. More confident. And more likely to come back. That’s the kind of UX we should be designing for today.

Let’s build the bridge.